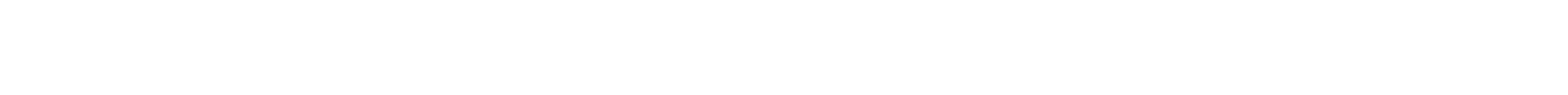

OpenAI confirmed that ChatGPT now attracts 700 million weekly active users, up from around 500 million in March. Its user base has quadrupled compared to last year, reflecting rapid growth in both consumer and business segments.

The surge includes users from free, Plus, Pro, Enterprise, Team, and educational plans. This demonstrates broad AI adoption among individuals, businesses, and schools.

ChatGPT Soars Past 700 Million Weekly Active Users

ChatGPT is one of the fastest-growing online platforms ever. Its natural language skills, wide range of functions, and global workflow integration fuel this growth.

OpenAI’s official figures show ChatGPT’s user base quadrupled in less than a year, as the platform expanded voice, coding, and data tools. This huge growth matches the rising interest in AI tools.

Demand for virtual assistants is growing, and machine learning is seeing wider use in business, education, and media.

The rise of ChatGPT brings not just innovation but also environmental responsibility into focus. As artificial intelligence grows, so does the need for electricity, cooling, and computing power. This raises key questions about carbon emissions, energy use, and water consumption.

ChatGPT’s Environmental Footprint: Carbon, Energy, and Water Use

Let’s look closely at each of these footprints to grasp the chatbot’s environmental impact.

Carbon Emissions from AI Queries: Emissions per Prompt

Each time a user enters a prompt into ChatGPT, servers housed in large data centers activate to generate a response. While a single query might seem harmless, the emissions can add up quickly when repeated millions—or billions—of times a week.

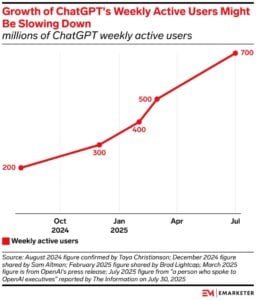

Recent research shows that each ChatGPT query consumes about 0.3 to 0.4 watt-hours of electricity. Depending on the energy source powering the data center, this results in around 0.15 grams of CO₂ per response.

That’s less than the footprint of a Google search but still meaningful at scale. Multiplied across millions of daily queries, it adds up to hundreds of thousands of kilograms of CO₂ emissions per month.

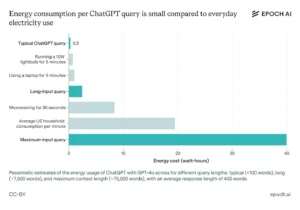

One estimate says ChatGPT might release over 260,000 kilograms of CO₂ each month. That’s like the emissions from 260 round-trip flights between New York and London. This amount would increase even more if users shift to longer or more complex prompts, which require more processing time and energy.
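The scaling described above is simple multiplication, and a short sketch makes it easy to try different inputs. The per-query figure of 0.15 grams comes from the article; the daily query counts below are hypothetical values chosen only to show how the total moves, not traffic numbers reported by OpenAI.

```python
def monthly_emissions_kg(queries_per_day: float,
                         grams_co2_per_query: float = 0.15,
                         days_per_month: int = 30) -> float:
    """Scale a per-query CO2 estimate to a monthly total in kilograms."""
    grams_per_month = queries_per_day * grams_co2_per_query * days_per_month
    return grams_per_month / 1000.0  # grams -> kilograms


# Hypothetical traffic levels (assumptions, not reported figures).
for queries_per_day in (10e6, 50e6, 100e6):
    total_kg = monthly_emissions_kg(queries_per_day)
    print(f"{queries_per_day:,.0f} queries/day -> {total_kg:,.0f} kg CO2/month")
```

Under these assumptions, 50 million queries a day works out to 225,000 kilograms of CO₂ a month, the same order of magnitude as the estimate quoted above.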

The Energy Hunger of AI

Energy use is at the core of ChatGPT’s footprint. OpenAI uses powerful servers equipped with GPUs (graphics processing units) or AI accelerators like those from NVIDIA. These systems require large amounts of electricity for both computation and cooling.

To support ChatGPT’s scale—700 million weekly users—OpenAI may be operating thousands of servers running 24/7. Estimates show that daily inference needs more than 340 megawatt-hours (MWh) of electricity. That’s about the same as what 30,000 U.S. homes use in a day.
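As a rough cross-check, the daily energy figure can be combined with the per-query range of 0.3 to 0.4 watt-hours cited earlier to see what query volume it would imply. This sketch assumes the entire 340 MWh goes to answering queries at that per-query cost; the two estimates may come from different sources, so the result is only indicative.

```python
DAILY_INFERENCE_MWH = 340.0       # daily inference estimate quoted in the article
WH_PER_QUERY_RANGE = (0.3, 0.4)   # per-query range quoted in the article


def implied_queries_per_day(daily_mwh: float, wh_per_query: float) -> float:
    """Queries a daily energy budget would cover at a given energy cost per query."""
    daily_wh = daily_mwh * 1_000_000  # 1 MWh = 1,000,000 Wh
    return daily_wh / wh_per_query


low_cost, high_cost = WH_PER_QUERY_RANGE
fewest = implied_queries_per_day(DAILY_INFERENCE_MWH, high_cost)
most = implied_queries_per_day(DAILY_INFERENCE_MWH, low_cost)
print(f"Implied volume: {fewest:,.0f} to {most:,.0f} queries per day")
```

The implied volume is on the order of one billion queries per day.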

And that’s just for inference. The training phase of large language models (LLMs) like GPT-3 or GPT-4 uses even more energy.

- Training GPT-3 used 1,287 megawatt-hours of energy. This caused about 550 metric tons of CO₂ emissions. That’s like a car driving 1.2 million miles.

Training newer, larger models—like GPT-4 and beyond—will likely require even more energy. Emissions depend on the energy mix, like renewables versus fossil fuels. Even in the best cases, high-performance computing still uses a lot of energy.
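To illustrate how much the energy mix matters, the GPT-3 figures above can be turned into an implied carbon intensity and then re-run with a cleaner grid. The 0.05 kg CO₂ per kWh value below is a hypothetical low-carbon grid used for comparison only; it is not a figure from the article.

```python
GPT3_TRAINING_MWH = 1287.0      # training energy quoted in the article
GPT3_EMISSIONS_TONNES = 550.0   # training emissions quoted in the article


def implied_intensity_kg_per_kwh(energy_mwh: float, tonnes_co2: float) -> float:
    """Carbon intensity implied by an energy total and an emissions total."""
    return (tonnes_co2 * 1000.0) / (energy_mwh * 1000.0)  # kg / kWh


def training_emissions_tonnes(energy_mwh: float, intensity_kg_per_kwh: float) -> float:
    """Emissions for the same energy use on a grid with a different intensity."""
    kg_co2 = energy_mwh * 1000.0 * intensity_kg_per_kwh
    return kg_co2 / 1000.0  # kg -> metric tons


intensity = implied_intensity_kg_per_kwh(GPT3_TRAINING_MWH, GPT3_EMISSIONS_TONNES)
print(f"Implied intensity: {intensity:.3f} kg CO2 per kWh")

HYPOTHETICAL_CLEAN_GRID = 0.05  # kg CO2 per kWh, an assumption for illustration
cleaner = training_emissions_tonnes(GPT3_TRAINING_MWH, HYPOTHETICAL_CLEAN_GRID)
print(f"Same training run on the hypothetical grid: {cleaner:.1f} metric tons CO2")
```

The quoted figures imply roughly 0.43 kg of CO₂ per kWh. The same energy use on the hypothetical cleaner grid would emit only a small fraction of the 550 metric tons.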

Water Usage for AI Cooling

One lesser-known but equally important resource consumed by ChatGPT is water. Data centers use water to cool hot-running servers, often in combination with air conditioning. The water either evaporates in cooling towers or is drawn from nearby freshwater sources and later released at a higher temperature.

A study estimates that every 20 to 50 queries to ChatGPT use about half a liter of water. Most of this water is for cooling the hardware that processes those responses. That means even a casual user engaging with ChatGPT 10 times a day may indirectly use roughly 0.7 to 1.75 liters of water per week.
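The weekly figure for a casual user follows directly from the study's range, and a small sketch shows the arithmetic. The only inputs are the half liter per 20 to 50 queries quoted above and the 10 queries a day from the example; the function name is illustrative.

```python
LITERS_PER_BATCH = 0.5              # half a liter, as quoted in the article
QUERIES_PER_BATCH_RANGE = (20, 50)  # queries covered by that half liter


def weekly_water_liters(queries_per_day: float,
                        queries_per_batch: float,
                        days: int = 7) -> float:
    """Indirect weekly water use for a user, given queries covered per half liter."""
    liters_per_query = LITERS_PER_BATCH / queries_per_batch
    return queries_per_day * days * liters_per_query


fewest_queries, most_queries = QUERIES_PER_BATCH_RANGE
high_estimate = weekly_water_liters(10, fewest_queries)  # half a liter lasts 20 queries
low_estimate = weekly_water_liters(10, most_queries)     # half a liter lasts 50 queries
print(f"10 queries/day: {low_estimate:.2f} to {high_estimate:.2f} liters per week")
```

Per query, the study's range corresponds to roughly 10 to 25 milliliters of water.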

The impact magnifies when considering model training. Training large AI models has used millions of liters of water. This is especially true in dry areas where cooling systems rely more on water than air.

Globally, the AI industry is expected to draw 4.2 to 6.6 billion cubic meters of water per year by 2027 if growth continues at the current pace. That’s equal to the annual water use of several million households.

Prompts, Processors & Power Grids: What Makes AI Greener?

Several factors influence how large or small ChatGPT’s environmental footprint becomes:

Prompt length and complexity:

A short sentence uses far less energy than a long essay or technical code. Complex prompts need more processing power, which raises energy use and emissions. A recent report shows they can use up to 50 times more energy per query.

Model size and efficiency:

GPT-4 and newer models are larger and more powerful than previous versions, but also more energy hungry. Smaller models such as GPT-3.5 or distilled versions use less energy and are well suited to simple tasks.

Data center location and power source:

Using renewable-powered data centers in cooler climates reduces both carbon and water footprints. Conversely, data centers relying on coal or natural gas contribute more to emissions.

Cooling methods:

Facilities that rely on advanced air-cooling or closed-loop water systems tend to have lower water footprints than traditional open cooling towers.

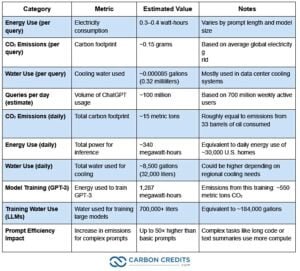

Here’s a glance at the chatbot’s environmental footprint:

ChatGPT Environmental Footprint

Industry Response: Moving Toward Sustainable AI

OpenAI and other AI leaders are increasingly aware of their environmental responsibilities. Many companies have committed to using renewable energy for data center operations.

Some companies are using carbon offset programs. They are also investing in energy-efficient chips from NVIDIA and AMD, which lower the power needed for each AI query.

Cloud service providers—such as Microsoft (a key OpenAI partner), Google, and Amazon—have all pledged to run their operations on 100% renewable energy by the end of the decade. Some already claim carbon neutrality for select cloud regions, although these claims often rely on offsets.

AI developers are also exploring ways to improve model efficiency, reducing the number of computations needed to produce high-quality responses. This helps not only lower costs but also shrink carbon and water footprints.

Users, too, have a role to play. They can help lessen the environmental impact of tools like ChatGPT by writing clearer prompts, avoiding unnecessary queries, and supporting companies that prioritize green AI.

Navigating ChatGPT Use and Sustainability

ChatGPT supports billions of interactions with a small per-query footprint, yet at this scale the cumulative environmental impact is substantial. Experts now call for more sustainable AI practices, such as:

- Choosing concise prompts to reduce processing time and energy.

- Using smaller, more efficient models when possible.

- Deploying energy-efficient hardware and renewable-powered data centers.

- Pursuing carbon-neutral operations, as companies like OpenAI, Google, and Microsoft aim to do, while also decarbonizing supply chains and the grids that power inference.

Some studies point out that certain types of AI prompts—especially long or complex ones—can use up to 50 times more energy than simpler requests. That means user behavior significantly affects environmental costs, making user education part of the solution.
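A simple weighted average shows how the share of complex prompts could shift the typical energy cost per query. This is a sketch under two simplifying assumptions: the base figure (0.3 Wh, the low end of the range cited earlier) applies to simple prompts, and every complex prompt costs the full 50 times upper bound. The shares of complex prompts are hypothetical.

```python
def average_wh_per_query(base_wh: float,
                         complex_share: float,
                         complex_multiplier: float = 50.0) -> float:
    """Average energy per query for a mix of simple and complex prompts."""
    if not 0.0 <= complex_share <= 1.0:
        raise ValueError("complex_share must be between 0 and 1")
    simple_part = (1.0 - complex_share) * base_wh
    complex_part = complex_share * base_wh * complex_multiplier
    return simple_part + complex_part


BASE_WH = 0.3  # low end of the per-query range quoted in the article

# Hypothetical shares of complex prompts in the overall mix.
for share in (0.0, 0.1, 0.5):
    avg = average_wh_per_query(BASE_WH, share)
    print(f"{share:.0%} complex prompts -> {avg:.2f} Wh per query on average")
```

Even a modest share of complex prompts dominates the average in this model, which is why prompt habits matter at scale.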

Reducing the carbon and water footprint of ChatGPT is not just an operational concern. It also matters for public trust, business adoption, and regulatory compliance, especially in sectors focused on ESG standards.

With ChatGPT’s weekly active users now at 700 million, the opportunity, and the responsibility, for sustainable scaling grows. OpenAI should balance larger server pools and more capable models with gains in efficiency.

The post ChatGPT Hits 700M Weekly Users, But at What Environmental Cost? appeared first on Carbon Credits.