AI’s Water and Electricity Usage: Separating Truth from Myth

Artificial intelligence has become one of the most transformative forces of our time, reshaping industries and influencing daily life. But as AI systems like ChatGPT have grown in scale, a pressing question has emerged: what is their real environmental footprint? Specifically, how much water and electricity does AI actually use, and how much of what we hear is misleading?

The conversation around AI’s resource consumption is complicated, and it’s easy to frame the numbers so they sound alarming or trivial, depending on who is telling the story. At the heart of the debate are two seemingly contradictory figures: one from OpenAI CEO Sam Altman, and another from a Morgan Stanley projection.

Two Numbers, One Confusing Story

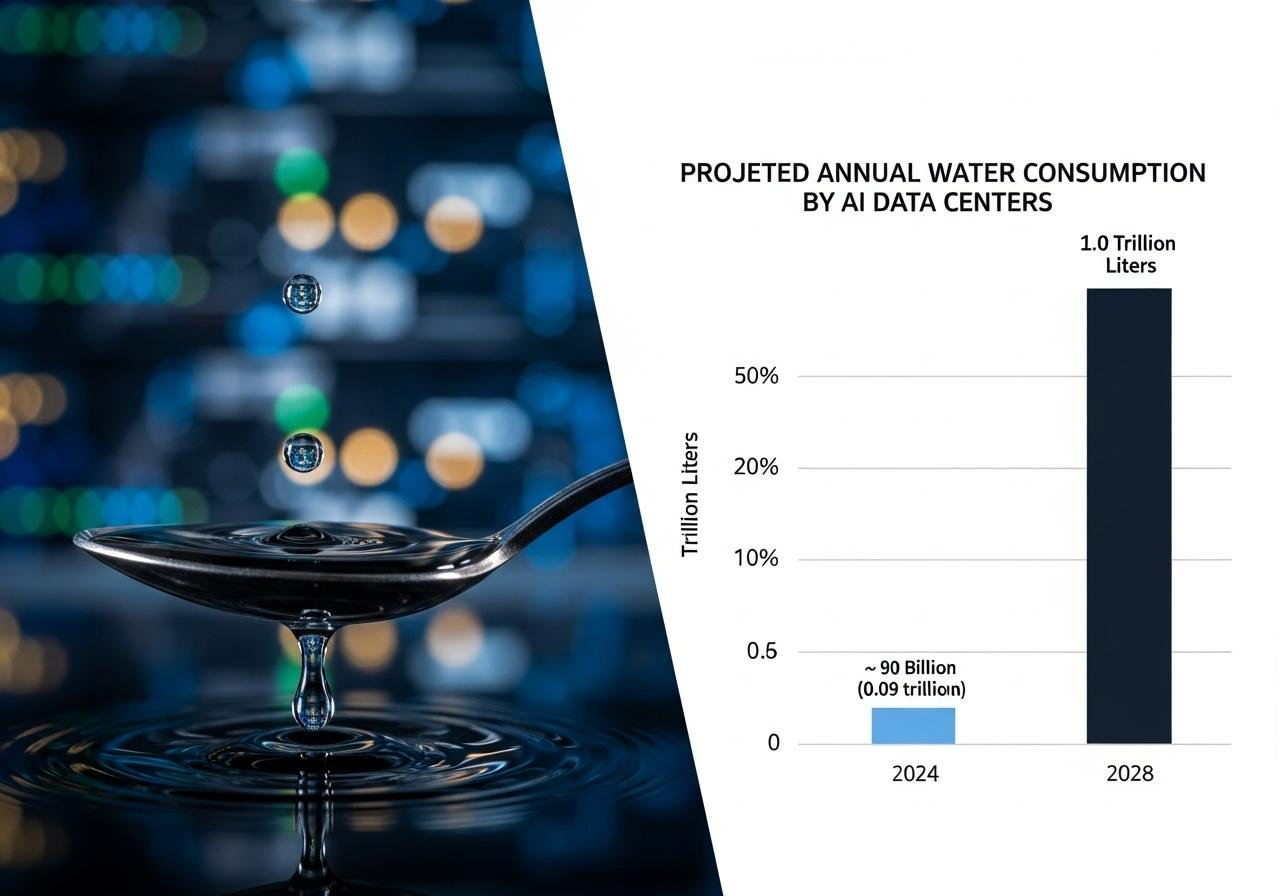

Sam Altman has said that an average ChatGPT query uses around 0.000085 gallons of water — roughly one-fifteenth of a teaspoon. That sounds negligible. Yet, Morgan Stanley’s analysts predict that by 2028, AI data centers could consume around one trillion liters of water annually — an elevenfold increase from 2024 levels. How can both be true?
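The two figures use different units and vastly different scales, so converting them makes the comparison concrete. Here is a minimal sketch that uses only the numbers quoted above and the standard U.S. unit definitions; the function names are illustrative.

```python
# Exact U.S. customary conversion factors.
LITERS_PER_US_GALLON = 3.785411784
TEASPOONS_PER_US_GALLON = 768  # 128 fl oz per gallon x 6 teaspoons per fl oz


def gallons_to_teaspoons(gallons: float) -> float:
    """Convert U.S. gallons to U.S. teaspoons."""
    return gallons * TEASPOONS_PER_US_GALLON


def liters_to_gallons(liters: float) -> float:
    """Convert liters to U.S. gallons."""
    return liters / LITERS_PER_US_GALLON


per_query_tsp = gallons_to_teaspoons(0.000085)  # Altman's per-query figure
print(f"One query: {per_query_tsp:.3f} tsp, about 1/{1 / per_query_tsp:.0f} of a teaspoon")

projection_gal = liters_to_gallons(1e12)  # Morgan Stanley's 2028 projection
print(f"2028 projection: about {projection_gal / 1e9:.0f} billion gallons per year")
```

The conversion confirms the “one-fifteenth of a teaspoon” description and shows that one trillion liters is roughly 264 billion gallons. The two numbers describe very different scopes: a single query versus all AI data centers over a full year.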

Why AI Data Centers Use Water

Every data center that powers AI models is essentially a warehouse full of high-performance computer chips. These chips generate enormous amounts of heat, and their performance — and lifespan — decline when temperatures rise. To maintain optimal operation, facilities rely on cooling systems, often involving water.

Many data centers use what’s known as evaporative cooling: as clean water evaporates, it absorbs heat and carries it away from the equipment. Some facilities recycle water or use non-potable sources, but most still depend heavily on municipal water, the same system that supplies homes and businesses. That means the water going into AI infrastructure competes with urban water needs, at least at the supply level.
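Evaporation works well for cooling because turning liquid water into vapor absorbs a large amount of heat, and that same property is why it consumes water. This back-of-envelope sketch assumes that essentially all the electricity the chips draw ends up as heat and that the cooling system rejects that heat by evaporation alone. The latent-heat value is an approximate textbook figure for water near room temperature, and the function name is illustrative.

```python
KJ_PER_KWH = 3600.0
LATENT_HEAT_KJ_PER_KG = 2450.0  # approx. latent heat of vaporization of water near 20-25 C
KG_PER_LITER = 1.0              # ASSUMPTION: treat 1 liter of water as 1 kg


def liters_evaporated(heat_kwh: float, evaporative_fraction: float = 1.0) -> float:
    """Liters of water evaporated to reject `heat_kwh` of heat.

    evaporative_fraction is the share of the heat removed by evaporation
    (1.0 = all of it). Sensible-heat transfer, blowdown, and drift losses
    are ignored in this sketch.
    """
    if not 0.0 <= evaporative_fraction <= 1.0:
        raise ValueError("evaporative_fraction must be between 0 and 1")
    kg = heat_kwh * KJ_PER_KWH * evaporative_fraction / LATENT_HEAT_KJ_PER_KG
    return kg / KG_PER_LITER


print(f"{liters_evaporated(1.0):.2f} L of water per kWh of heat (idealized)")
```

About 1.5 liters per kilowatt-hour of heat is an idealized figure. Real facilities reject part of their heat without evaporation and lose additional water to blowdown and drift, so actual on-site water use varies widely with climate and cooling design.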

The Hidden Footprint: AI Model Training

Altman’s teaspoon-per-query figure does not appear to account for one of the most resource-intensive parts of AI’s lifecycle: training the models themselves. Training a large model like GPT involves running thousands of GPUs for weeks or even months, continually processing massive datasets. By some estimates, the training phase can account for up to half of an AI system’s total resource use.

When data centers train a new model, the energy and water consumed should, in theory, be distributed across every eventual query made to that model. However, since companies like OpenAI don’t publicly share the details of training time, infrastructure size, or water sources, it’s nearly impossible to calculate an accurate per-query footprint. This lack of transparency enables both sides of the argument — those minimizing AI’s impact and those exaggerating it — to shape their own convenient versions of reality.
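The amortization described above can be written as a simple formula: per-query footprint = inference cost per query + (total training cost ÷ number of queries served over the model’s lifetime). This sketch assumes that simple equal split. It treats Altman’s figure as inference-only, which is an assumption, and the training total is a hypothetical placeholder because the real figure is not public.

```python
def per_query_footprint(inference_per_query: float,
                        training_total: float,
                        lifetime_queries: float) -> float:
    """Per-query footprint with the one-time training cost spread evenly
    over every query the model serves during its lifetime.

    All three quantities must use the same unit (gallons, liters, kWh, ...).
    """
    if lifetime_queries <= 0:
        raise ValueError("lifetime_queries must be positive")
    return inference_per_query + training_total / lifetime_queries


INFERENCE_GAL = 0.000085   # Altman's per-query figure, treated as inference only (assumption)
TRAINING_GAL = 5_000_000   # HYPOTHETICAL placeholder; no real training figure is public

# The amortized result depends heavily on how many queries share the training cost.
for queries in (1e9, 1e11, 1e13):
    total = per_query_footprint(INFERENCE_GAL, TRAINING_GAL, queries)
    share = (TRAINING_GAL / queries) / total
    print(f"{queries:.0e} queries: {total:.7f} gal/query, training share {share:.0%}")
```

If the training total stays fixed, the same model can look dominated by either training or inference, depending only on the assumed lifetime query count. This is why undisclosed inputs leave so much room for competing narratives.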

Electricity’s Hidden Water Cost

Even if data centers improve their direct water use efficiency, the electricity that powers them still comes with its own hidden water footprint. In the United States, about 40% of all freshwater withdrawals are linked to thermoelectric power plants — those that use steam to generate electricity from coal, gas, or nuclear energy.

These plants rely on water for cooling. Steam drives the turbines that generate electricity, and that steam must then be condensed back into water before the cycle repeats. This happens in a condenser, where the steam gives up its heat to cold water drawn from nearby rivers, lakes, or oceans. In plants with once-through cooling, only a small portion of that water evaporates. The rest is returned to the source, typically warmer than before, which can damage aquatic ecosystems.

When large-scale AI systems consume power generated from these sources, part of that power plant’s water use can reasonably be attributed to AI. This is why some reports on “AI water use” include the water flowing through thermoelectric plants — though technically, it’s not the same as the clean, municipal water that cools servers directly.
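One way to keep the direct and indirect components separate is to compute them side by side. This minimal sketch assumes the facility’s energy use is known and that two water intensities are available: on-site cooling water per kWh of server (IT) energy, and water per kWh at the supplying power plants. PUE (power usage effectiveness, total facility energy divided by IT energy) is a standard industry ratio. Every numeric input below is a hypothetical placeholder, and the function name is illustrative.

```python
def water_footprint_liters(it_energy_kwh: float,
                           onsite_l_per_kwh: float,
                           pue: float,
                           grid_l_per_kwh: float) -> dict[str, float]:
    """Split a data center's water footprint into direct and indirect parts.

    it_energy_kwh    -- energy consumed by the servers (IT equipment)
    onsite_l_per_kwh -- on-site cooling water per kWh of IT energy
    pue              -- power usage effectiveness: facility energy / IT energy
    grid_l_per_kwh   -- water per kWh at the supplying power plants
                        (withdrawal or consumption, depending on what is counted)
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    facility_energy_kwh = it_energy_kwh * pue        # servers plus cooling and other overhead
    direct = it_energy_kwh * onsite_l_per_kwh        # water used on site for cooling
    indirect = facility_energy_kwh * grid_l_per_kwh  # water attributed to power generation
    return {"direct": direct, "indirect": indirect, "total": direct + indirect}


# HYPOTHETICAL inputs, chosen only to illustrate the calculation.
result = water_footprint_liters(it_energy_kwh=1_000_000, onsite_l_per_kwh=0.5,
                                pue=1.2, grid_l_per_kwh=2.0)
for part, liters in result.items():
    print(f"{part:>8}: {liters:,.0f} L")
```

The indirect term changes dramatically depending on whether `grid_l_per_kwh` counts all water withdrawn by the plant or only the water it evaporates. That choice is one reason published “AI water use” figures disagree.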

Different Kinds of Water, Different Kinds of Impact

Not all water is created equal. Municipal water is purified and made safe for drinking, while water used for power generation is usually drawn straight from natural sources and returned to them afterward. There’s also “ultrapure” water used in semiconductor manufacturing — an essential step in producing the chips that make AI possible. That ultrapure water is energy-intensive to produce, but used in small quantities compared to cooling water.

This distinction matters because, while global water use is often reported as a single number, the local impact depends on what kind of water is being consumed and where. A data center in the Pacific Northwest, where water is plentiful, poses a very different sustainability challenge than a similar facility built in an arid region like Arizona. Water scarcity is local: using potable water in a drought-stricken area can strain communities and ecosystems far more than the same usage elsewhere.
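One simple way to express “water scarcity is local” is to weight each site’s water consumption by a factor that reflects how stressed its local supply is. This sketch assumes such a factor exists for each site. The factor values and site labels are hypothetical and are not drawn from any published index.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    consumption_liters: float  # water consumed per year at the site
    scarcity_factor: float     # HYPOTHETICAL: 1.0 = average stress; higher = scarcer


def weighted_impact(site: Site) -> float:
    """Scale raw consumption by local scarcity so sites can be compared."""
    return site.consumption_liters * site.scarcity_factor


# Two hypothetical facilities with identical consumption in different climates.
sites = [
    Site("water-rich region", consumption_liters=1e8, scarcity_factor=0.5),
    Site("arid region", consumption_liters=1e8, scarcity_factor=5.0),
]
for s in sorted(sites, key=weighted_impact, reverse=True):
    print(f"{s.name:<18} raw={s.consumption_liters:.1e} L  weighted={weighted_impact(s):.1e}")
```

Here, identical raw consumption produces a tenfold difference in weighted impact, purely because of the assumed factors. The sketch illustrates how the comparison is structured; the numbers themselves carry no meaning.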

When Context Changes Everything

Water usage is only part of the sustainability picture — and often not the largest. For most data centers, electricity is the bigger environmental challenge. Energy generation not only impacts water systems but also determines greenhouse gas emissions. As AI models scale, the surge in electricity demand may outpace renewable energy growth, straining grids and complicating national carbon goals.

Perspective: AI vs. Agriculture

Even under aggressive projections such as Morgan Stanley’s, global AI data centers would use about 260 billion gallons of water per year by 2028. That’s a big number, until you compare it to agriculture. In the United States alone, corn production consumes around 20 trillion gallons annually. That’s roughly 77 times as much water as all the world’s AI data centers combined.

And here’s the kicker: only about 1% of corn grown in the U.S. is eaten directly by people. About 40% goes to ethanol production for fuel, which means enormous amounts of water are spent growing a crop that is ultimately burned in cars. Each gallon of ethanol carries an irrigation footprint of roughly 1,500 gallons of water before refining. Put in perspective, AI’s footprint, while not insignificant, is a fraction of what we already accept as normal in other industries.
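The comparisons in this section are easy to reproduce from the quoted figures. Note that this sketch compares U.S. corn alone with the global AI projection. Splitting corn’s water use by end use in proportion to the 40% ethanol share is an assumption, not a figure from the source.

```python
# Figures quoted in this section (all approximate).
AI_2028_GALLONS = 260e9   # projected global AI data-center water use in 2028
US_CORN_GALLONS = 20e12   # annual water use of U.S. corn production
ETHANOL_SHARE = 0.40      # share of U.S. corn that goes to ethanol

# ASSUMPTION: corn's water use splits in proportion to how the harvest is used.
ethanol_corn_gallons = US_CORN_GALLONS * ETHANOL_SHARE

corn_vs_ai = US_CORN_GALLONS / AI_2028_GALLONS
ethanol_vs_ai = ethanol_corn_gallons / AI_2028_GALLONS

print(f"U.S. corn vs. global AI (2028): about {corn_vs_ai:.0f}x")
print(f"Corn grown for ethanol vs. global AI (2028): about {ethanol_vs_ai:.0f}x")
```

Under that proportional assumption, the corn grown for fuel alone would use roughly 30 times the projected water use of every AI data center in the world.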

What Really Matters Going Forward

The debate around AI’s water and energy use reveals more about our collective understanding of sustainability than it does about ChatGPT itself. The truth is that resource analysis is messy. Whether a number looks large or small depends entirely on what’s included, what’s excluded, and where that resource is being used.

That’s why transparency from AI companies is so crucial. Without clear reporting on how much water and electricity go into training, running, and maintaining models, the public conversation will remain full of half-truths. Meaningful progress requires both data and context — along with the willingness to confront tradeoffs honestly.

And while AI’s direct cooling water might draw headlines, it’s the indirect consequences of energy demand — increased emissions, grid expansion, and growing dependence on fossil fuels — that could shape our environmental future far more significantly.

Final Thoughts

AI’s environmental footprint is real, but it must be viewed in proportion to other industrial uses and through the lens of local context. The difference between sustainable and irresponsible growth lies in where and how companies build. A hyperscale data center in a water-stressed desert, reliant on fossil electricity, presents vastly different outcomes than a renewable-powered, air-cooled facility in a water-rich region.

As technology and sustainability increasingly intersect, the challenge isn’t just reducing AI’s footprint — it’s designing an AI ecosystem that reflects smarter resource management, transparent data, and regionally appropriate planning. The myths will fade as transparency grows, but the need for thoughtful choices remains constant.

This article is for informational purposes only.

The post Ai Water and Electricity usage truths and myths appeared first on Green.org.